When performing purple team exercises, it is often desirable to simulate attack techniques featured in threat intelligence. If a specific threat actor is a likely threat to the organization, then focusing on the tactics, techniques, and procedures (TTPs) they’ve been observed using and/or TTPs they are likely to use makes sense for determining the efficacy of the organization’s security controls against that actor. However, ready-made attack plans for that actor may not be available, so analysts should learn how to develop their own intel-based test plans. In this post, we outline our process for developing attack plans from threat intelligence: scoping intelligence collection, collecting reports, analyzing reports for TTPs, and then assembling an attack plan and related resources.

Targeting and Scoping

Whether or not a specific threat actor is likely to target an organization is generally based on one or more of the following criteria:

- Actor has targeted other organizations in the same industry vertical

- Actor has targeted other organizations in the same geographic area

- Organization’s work products overlap with actor’s strategic objectives (e.g. IP theft)

- Organization uses technology affected by recent vulnerabilities (opportunistic attacks, e.g. a recently published PoC exploit for a commonly used VPN appliance)

- Trusted third-party analysis suggests a likely threat (e.g. an intelligence agency releasing an advisory for members of a target group)
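Because the criteria above are "one or more" checks rather than a formula, it can help to record explicitly which ones a candidate actor meets, so the scoping decision is documented alongside the plan. The sketch below is a minimal illustration under stated assumptions: `ActorProfile`, `OrgProfile`, `matched_criteria`, and all field names are hypothetical structures, not part of any tool mentioned in this post.

```python
from dataclasses import dataclass, field

@dataclass
class ActorProfile:
    """Hypothetical summary of what collected intel says about an actor."""
    name: str
    targeted_industries: set[str] = field(default_factory=set)
    targeted_regions: set[str] = field(default_factory=set)
    objectives: set[str] = field(default_factory=set)          # e.g. {"ip_theft"}
    exploited_products: set[str] = field(default_factory=set)  # recently exploited tech
    advisory_issued: bool = False                               # trusted third-party warning

@dataclass
class OrgProfile:
    """Hypothetical description of the organization being tested."""
    industry: str
    regions: set[str]
    assets_of_interest: set[str]  # work products, matched against actor objectives
    technologies: set[str]

def matched_criteria(actor: ActorProfile, org: OrgProfile) -> list[str]:
    """Return the names of the targeting criteria this actor meets for this org."""
    checks = {
        "industry": org.industry in actor.targeted_industries,
        "geography": bool(org.regions & actor.targeted_regions),
        "objectives": bool(org.assets_of_interest & actor.objectives),
        "vulnerable_technology": bool(org.technologies & actor.exploited_products),
        "third_party_advisory": actor.advisory_issued,
    }
    return [name for name, hit in checks.items() if hit]
```

Candidate actors could then be ranked by `len(matched_criteria(actor, org))`, though the final call on which actor to focus on remains an analyst judgment.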

Note that while some organizations need test plans focused on a single threat actor, you can also develop a more general plan from broad-scope threat intelligence to cover common TTPs, such as our shared Threat Index test plan.

Once a likely threat actor has been identified, the next step is to locate timely intelligence data. Good starting points include aggregators such as Malpedia and ETDA, security-related subreddits (r/purpleteamsec, r/blueteamsec), community threat feeds (such as those for MISP), and, if applicable, commercial threat intelligence platforms and industry ISACs. When selecting threat intelligence reports, we prefer (1) recent reports and (2) reports from a single reporting agency.

Timeliness matters because threat actors often change and improve their TTPs: the more recent the information, the more likely the observed TTPs still apply. Note that timeliness here is based on when the TTPs were executed, not when the report was written.
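The distinction between activity date and publication date is easy to lose when sorting a reading list. As a minimal sketch, assuming the analyst records a hypothetical `activity_end` date (the latest date the described TTPs were observed) for each report, filtering and ordering on that field rather than on `published` could look like this:

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Report:
    title: str
    published: date      # when the report was written
    activity_end: date   # latest date the described TTPs were observed (hypothetical field)

def timely_reports(reports: list[Report], cutoff: date) -> list[Report]:
    """Keep reports whose observed activity is on or after `cutoff`, newest activity first.

    Publication date is deliberately ignored: a new report about old activity
    is less useful here than an older report about recent activity.
    """
    recent = [r for r in reports if r.activity_end >= cutoff]
    return sorted(recent, key=lambda r: r.activity_end, reverse=True)
```

For example, a retrospective published in 2024 that describes 2022 activity would be dropped with a mid-2022 cutoff, while a 2023 report on 2023 activity would be kept.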

Reporting agencies do not use the same criteria for tracking threat actors, nor do they necessarily have access to the same raw incident data. Combined with the lack of a common standard for naming and reporting on threat actors, this makes it difficult to align reports about (purportedly) the same actor across agencies. Where possible, stick to a single agency to avoid these issues, though mixing sources is unavoidable when intelligence is scarce.

We then filter the initial list of reports for the actor down to those that contain actionable data, cutting reports that are primarily high-level, such as advisories and executive summaries.
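The single-agency preference and the actionable-data filter can be combined into one selection step. The sketch below is one possible approach, not our tooling: it drops high-level report types first, then keeps only the agency with the most actionable reports, falling back to a mix of agencies when no single source has enough. `CandidateReport`, `select_reports`, the `kind` labels, and the `min_reports` threshold are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass

# Report kinds treated as too high-level to yield procedures (an assumption; adjust as needed).
NON_ACTIONABLE = {"advisory", "executive_summary"}

@dataclass
class CandidateReport:
    title: str
    agency: str
    kind: str  # hypothetical label, e.g. "technical", "advisory", "executive_summary"

def select_reports(reports: list[CandidateReport], min_reports: int = 3) -> list[CandidateReport]:
    """Drop high-level reports, then prefer the agency with the most actionable reports.

    If no single agency has at least `min_reports` actionable reports, fall back to
    mixing agencies, since sparse intelligence makes that unavoidable.
    """
    actionable = [r for r in reports if r.kind not in NON_ACTIONABLE]
    if not actionable:
        return []
    agency, count = Counter(r.agency for r in actionable).most_common(1)[0]
    if count >= min_reports:
        return [r for r in actionable if r.agency == agency]
    return actionable
```

Filtering for actionable reports before choosing an agency means the preferred agency is the one with the most usable detail, not simply the one that published the most.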

Intelligence Review

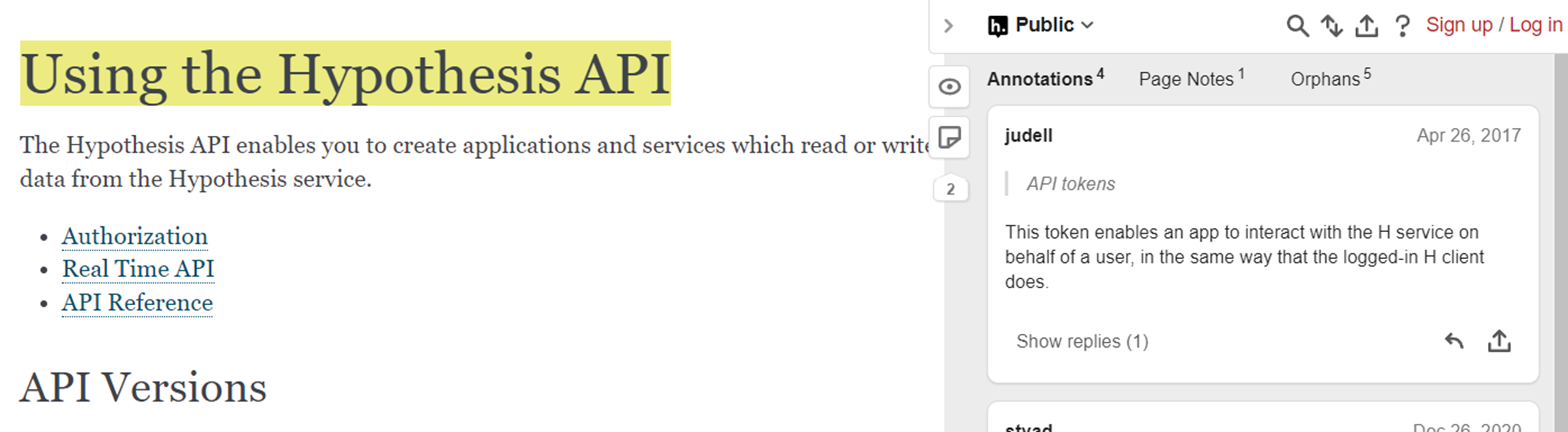

The goal of reviewing intelligence is to extract the TTPs used by the actor and track that information in some useful way, such as a spreadsheet. We use an open-source project called Hypothesis. Hypothesis provides a browser extension that exposes an annotation pane on every page a user visits, letting them both annotate selected text and view annotations from community members.

Hypothesis annotations for a public website

The default Hypothesis clients use a shared public server, but you can also deploy it privately to secure data and manage access as desired. For single user workflows, there is an Obsidian editor plugin based on Hypothesis that works locally: https://github.com/elias-sundqvist/obsidian-annotator.

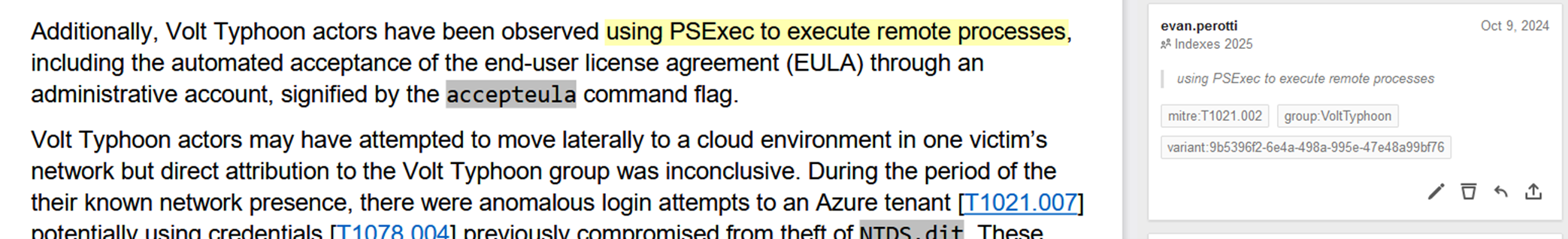

Annotating attack TTPs and adding additional metadata in Hypothesis

The primary benefit of an annotation-based approach to analysis is that it can be done in-line with the information, allowing an analyst to retain provenance of the attack details. This is useful for reviewing the analysis since the reviewer can see the details that led to the annotation. It’s also useful during plan development since you can see the context of the attack details when developing the test cases.
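To carry that provenance into a spreadsheet, each exported row can keep the source URL and the exact highlighted text next to the analyst's note. The sketch below assumes annotation objects shaped like Hypothesis search results (`uri`, `text`, `tags`, and a `target` list whose selectors include a `TextQuoteSelector` with an `exact` field), and assumes the team's convention of tagging annotations with ATT&CK technique IDs; `to_rows`, `to_csv`, and the column names are hypothetical.

```python
import csv
import io
import re

# Matches ATT&CK technique and sub-technique IDs such as T1053 or T1053.005.
ATTACK_ID = re.compile(r"^T\d{4}(?:\.\d{3})?$")
COLUMNS = ["source", "quote", "note", "techniques", "other_tags"]

def quoted_text(annotation: dict) -> str:
    """Return the exact highlighted text from the first TextQuoteSelector, if any."""
    for target in annotation.get("target", []):
        for selector in target.get("selector", []):
            if selector.get("type") == "TextQuoteSelector":
                return selector.get("exact", "")
    return ""

def to_rows(annotations: list[dict]) -> list[dict]:
    """Flatten annotations into spreadsheet rows that keep source URL and quote."""
    rows = []
    for annotation in annotations:
        tags = annotation.get("tags", [])
        rows.append({
            "source": annotation.get("uri", ""),
            "quote": quoted_text(annotation),
            "note": annotation.get("text", ""),
            "techniques": ";".join(t for t in tags if ATTACK_ID.match(t)),
            "other_tags": ";".join(t for t in tags if not ATTACK_ID.match(t)),
        })
    return rows

def to_csv(rows: list[dict]) -> str:
    """Serialize rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Keeping the quote column means a reviewer can check each extracted technique against the report wording without reopening the page.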

Plan Development

Once all intelligence has been reviewed, the extracted information can be used to develop test cases. How you store test cases will depend on how you plan to execute the tests. We use the open-source Market Maker to manage our test case content, so we develop new test cases in accordance with its schema.

The general workflow we follow for converting the TTP information into a test case is as follows:

- Filter out any items that lack sufficient information for inclusion

  - For example, if a report notes that the threat actor dumped credentials from LSASS but omits details about the dumping mechanism and the tools used, we generally leave that technique out of the final plan.

- For each test case, review the context surrounding the original annotation(s) to identify the intent and specifics of the test case

- Generalize the procedure, where applicable

  - Variations of a given procedure do not necessarily introduce a material difference for a test case, so we generalize similar procedures to a common form. For example, if a report notes several variations of a threat actor creating a scheduled task with `schtasks.exe` (e.g. running hourly vs. daily vs. on a set schedule vs. as an admin), we include one representative, common-denominator procedure in the plan.

- Create a test case file if needed

  - Many TTPs recur across reports. We store all test cases in a shared library, independent of their origin, so that we can reuse existing test cases that align with the observed TTPs instead of developing new ones.

- If the test case requires any infrastructure, payloads, special requirements, and/or additional research, note that down for follow-up activities

- Add the test case to the test plan
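The workflow above can be sketched as a single pass over the extracted observations. This is a simplified model under stated assumptions, not Market Maker's schema or our actual process: `Observation`, `LibraryCase`, and `build_plan` are hypothetical, "insufficient detail" is reduced to "no tool or mechanism named", and generalization is reduced to collapsing observations that share a technique and tool, keeping the first as the representative. In practice, choosing the representative procedure is an analyst judgment.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Observation:
    technique: str                      # ATT&CK ID, e.g. "T1053.005"
    tool: Optional[str]                 # tool/mechanism named in the report, if any
    detail: str                         # the annotated text
    requirements: tuple[str, ...] = ()  # e.g. ("payload",) or ("infrastructure",)

@dataclass
class LibraryCase:
    technique: str
    tool: str
    procedure: str
    requirements: set[str] = field(default_factory=set)

def build_plan(observations: list[Observation], library: dict[tuple[str, str], LibraryCase]):
    """Return (plan, follow_ups); `library` is updated with any newly created cases."""
    plan: dict[tuple[str, str], LibraryCase] = {}
    follow_ups = []
    for obs in observations:
        # Filter: skip items with no concrete mechanism (e.g. "dumped LSASS", no tool named).
        if not obs.tool:
            continue
        # Generalize: variations sharing technique and tool collapse into one test case.
        key = (obs.technique, obs.tool)
        if key in plan:
            continue
        # Reuse a shared-library case when one exists; otherwise create and store one.
        case = library.get(key)
        if case is None:
            case = LibraryCase(obs.technique, obs.tool, obs.detail, set(obs.requirements))
            library[key] = case
        # Note infrastructure, payload, or research needs for follow-up.
        if case.requirements:
            follow_ups.append((key, sorted(case.requirements)))
        # Add the test case to the plan.
        plan[key] = case
    return list(plan.values()), follow_ups
```

Because the library is keyed independently of any one report, a case created for one actor is picked up automatically the next time another report describes the same technique and tool.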

Once the base plan is in place, we perform any additional filtering needed to balance it for its intended use. For example, if we are trying to develop a plan with a good balance across MITRE Tactics, we might cut test cases from Tactics that contain many more test cases than others. This type of filtering requires a gentle touch so as not to remove items that are otherwise important for providing appropriate coverage for the threat actor.
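One way to make that balancing explicit is a per-Tactic cap combined with a must-keep set for test cases the analyst considers essential to covering the actor. The sketch below assumes test cases are given as hypothetical `(case_id, tactic)` pairs in priority order; `balance_by_tactic` and its parameters are illustrative names only. Must-keep cases count toward their Tactic's budget but are never cut, even if that pushes a Tactic over the cap.

```python
from collections import defaultdict

def balance_by_tactic(cases: list[tuple[str, str]], max_per_tactic: int,
                      keep: frozenset[str] = frozenset()) -> list[str]:
    """Cap test cases per Tactic, preserving order and never dropping must-keep cases.

    `cases` holds (case_id, tactic) pairs, highest priority first.
    """
    counts: defaultdict[str, int] = defaultdict(int)
    # First pass: reserve budget for must-keep cases so they displace optional ones.
    for case_id, tactic in cases:
        if case_id in keep:
            counts[tactic] += 1
    kept = []
    # Second pass: keep must-keep cases unconditionally, others while budget remains.
    for case_id, tactic in cases:
        if case_id in keep:
            kept.append(case_id)
        elif counts[tactic] < max_per_tactic:
            counts[tactic] += 1
            kept.append(case_id)
    return kept
```

Reserving budget for must-keep cases first means an essential but low-priority test case displaces optional cases from the same Tactic rather than being cut itself.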

Test cases that make the final plan may also require additional resources for their execution. Depending on the requirement, we may develop:

- Infrastructure: Update project automation modules (Terraform, Ansible) for deploying required infrastructure

- Payload: Develop new payloads and/or adopt open-source options, then integrate them into payload creation automation tooling (e.g. pdcd).

- Additional execution details (e.g. setup, prerequisites): Write guidance documents to supplement test cases.

The final plan can then be imported into the platform of choice (e.g. VECTR, a BAS tool) and/or used as the basis for additional development (e.g. attack automations).

Attack plan summary in Market Maker’s Darkpool viewer

If you are interested in getting started with attack plan development, you can use the quick start guide in the Market Maker repo: https://github.com/SecurityRiskAdvisors/marketmaker/blob/main/docs/Quickstart.md

If you have any questions or concerns, feel free to reach out to @2xxeformyshirt.