Every day, organizations are finding new uses for AI models. As with any transformative technology, AI introduces both risks and opportunities for businesses, and organizations should be prepared to protect their AI technology at the same level they protect traditional “crown jewel” or other sensitive data. Senior leadership will look to CISOs for guidance, both on how to protect AI and how to use it to enhance the security of their organizations. What follows is some guidance on how a CISO might proceed as AI technology evolves and is deployed.

CISO Scenario: Microsoft Copilot

Sensitive Data Exposure to Employees

Existing SharePoint and OneDrive user access controls can be over-permissive. This can result in employees accessing data not intended for their use.

This risk is not new, but it is exacerbated by the ease with which AI can correlate, analyze and present the data back to the employee.

External Connections

There are two options for connections: read-only graph connectors and read/write plugins. The connectors and plugins respect the authorizations defined by the external application, which underscores the importance of strong access controls everywhere.

This risk is the same as that of an external API that performs functions in an application.

No Out-of-the-Box Alerting Available

Existing SharePoint search alerts do not currently work with Copilot.

Threat actors with access to an employee account may have an advantage in gaining access to sensitive data compared with previously established techniques.

Copilot queries and results are currently available only in a forensic eDiscovery context.

Use Purview sensitivity labels to classify and encrypt documents containing sensitive data, and restrict access. Labels can be applied automatically and retroactively with Purview. Accuracy is understandably a challenge, and tuning needs resources.

Syntex is a $3/user/month add-on that can help manage SharePoint permissions, but will require significant effort.

DATA LOSS PREVENTION

Microsoft Purview or existing DLP controls can and should be used to retroactively and temporarily limit access to documents that have overly permissive access controls.
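As a minimal sketch of the triage step behind this control, the Python below flags labeled documents that are shared with broad groups. It assumes permissions and sensitivity labels have already been exported into simple records; the `DocumentRecord` shape, the label names, and the `All Employees` group are hypothetical placeholders, not a Purview or SharePoint API.

```python
from dataclasses import dataclass

# Assumption: principals your organization treats as "everyone". Adjust to your tenant.
BROAD_PRINCIPALS = {"Everyone", "Everyone except external users", "All Employees"}
# Assumption: sensitivity label names that mark restricted content.
SENSITIVE_LABELS = {"Confidential", "Highly Confidential"}


@dataclass(frozen=True)
class DocumentRecord:
    """Hypothetical export row: one document, its label, and who can read it."""
    path: str
    sensitivity_label: str
    principals: tuple


def find_overshared(documents):
    """Return (path, broad_principals) for sensitive documents shared too widely."""
    flagged = []
    for doc in documents:
        broad = sorted(set(doc.principals) & BROAD_PRINCIPALS)
        if doc.sensitivity_label in SENSITIVE_LABELS and broad:
            flagged.append((doc.path, broad))
    return flagged
```

The output is a worklist for asset owners or for a DLP policy that temporarily restricts access while permissions are corrected.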

METHODICALLY ENABLE SHAREPOINT SITES

The semantic index is queried when a user submits a Copilot search. Excluding SharePoint sites from Microsoft Search and the semantic index will prevent Copilot from returning results from those sites, regardless of the permissions on the files within those sites.

CISO Scenario: Internal LLMs

Training Data

Internally developed AI training sets can quickly become “crown jewels” of the organization.

Employees with overly permissive access or threat actors with malicious intent may alter the training data, resulting in a bad model or one that produces incorrect results.

Training data can also contain PII, ePHI and other sensitive business IP.

LLM Access Control

Traditional access controls can help prevent unauthorized access to production internal LLMs.

This is not a new risk, and access to purpose-built LLMs should be limited to the individuals and groups that require it via your existing IAM controls.

Dev Environments

The ease with which a new LLM can be created and deployed will lead to LLM sprawl and unauthorized LLM usage within business units.

Consider this risk like “shadow IT”. Providing approved architectures, environments and tools will help curb this.

Continue to educate your employees about the risks associated with public LLMs and the importance of safeguarding sensitive data. Training should include how to recognize and handle sensitive information and the consequences of policy violations.

REPOSITORY SCANNING

Establish and enforce a policy that bans the use of data sets in code repositories. Establish regular scanning of inventoried repositories for configuration violations, the presence of data sets, and other sensitive data, such as credentials. Look to establish regular OSINT scanning for repositories that should not be public. Leverage inventory data to communicate alerts back to asset owners.
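The scanning step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a replacement for a dedicated repository scanner: the file extensions, size threshold, and three credential patterns are hypothetical policy values, and `scan_repository` is a name invented for this example.

```python
import re
from pathlib import Path

# Assumptions: which files count as "data sets" and how large is too large.
DATASET_EXTENSIONS = {".csv", ".parquet", ".jsonl", ".xlsx"}
MAX_DATA_FILE_BYTES = 1_000_000
MAX_SCAN_BYTES = 1_000_000  # only the first part of each file is searched

# Assumption: a small, illustrative set of credential patterns.
CREDENTIAL_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(
        r"\bpassword\s*[:=]\s*['\"][^'\"]{4,}['\"]", re.IGNORECASE
    ),
}


def scan_repository(root):
    """Return (relative_path, finding) tuples for one checked-out repository."""
    root = Path(root)
    findings = []
    for path in sorted(root.rglob("*")):
        rel_parts = path.relative_to(root).parts
        if not path.is_file() or ".git" in rel_parts:
            continue
        rel = "/".join(rel_parts)
        # Large data files are reported as possible data sets and not read further.
        if path.suffix.lower() in DATASET_EXTENSIONS and path.stat().st_size > MAX_DATA_FILE_BYTES:
            findings.append((rel, "possible_data_set"))
            continue
        try:
            with path.open("rb") as handle:
                text = handle.read(MAX_SCAN_BYTES).decode("utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip rather than fail the whole scan
        for name, pattern in CREDENTIAL_PATTERNS.items():
            if pattern.search(text):
                findings.append((rel, name))
    return findings
```

Running this over each inventoried repository and joining the findings to the repository's owner in the inventory gives the alert routing described above.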

CISO Scenario: Public and Third Party LLMs

Accidental Sensitive Data Exposure

LLMs allow file uploads; however, the likelihood that a complete, large data set is uploaded for a public LLM to train on is currently minimal, given the size of complete data sets and the current limitations of public LLMs.

Leaking incomplete data sets is a more common scenario, but it can be limited by implementing appropriate firewall or proxy blocks. Currently, ChatGPT has a 10GB-per-day limit on file uploads.

Malicious Sensitive Data Exposure

The existence of publicly available LLMs does not significantly change the threat profile for organizations, except those creating sought-after private AI algorithms.

A threat actor motivated to steal sensitive data has not gained any significant advantage with the advent of public LLMs.

Third Party Data Loss

Third party usage of data is now and will continue to be difficult to track.

While policies and contracts can stipulate that third parties should not use data in public or semi-public LLMs, there are no controls limiting this exposure. In addition, third-party tools may be silently training a non-public LLM for later use.

Continue to focus on your security program's initiatives, driven by measurable results from purple teams within your continuous security testing program, NIST/ISO gap assessments, and basics like strong auth, vuln management, and IAM.

THIRD PARTY RISK ASSESSMENTS

Create new standard questions and disseminate them across the organization for third party risk assessments. Review TPAs to ensure that proper legal protections are in place to minimize unwanted exposure to AI services. This also applies to M&A activity. If M&A includes new data sets, ensure a process is in place to onboard them into the enterprise data set inventory.

MONITORING AND DATA LOSS PREVENTION

Adapt your current detect and block solutions to focus on new AI risks such as blocking unapproved public LLMs, forcing always-on VPN/Proxy to monitor traffic, alerting on large data uploads to known public LLMs, and alerting on training data moving from approved locations.
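The block and alert logic described here can be sketched as a small rule evaluation over proxy events. Everything named below is an assumption made for illustration: the domain lists, the 50 MB threshold, and the `ProxyEvent` record are hypothetical, and the events are assumed to cover a single day.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumptions: illustrative domain lists and threshold; maintain your own.
PUBLIC_LLM_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}
APPROVED_LLM_DOMAINS = {"chatgpt.com"}
UPLOAD_ALERT_BYTES = 50 * 1024 * 1024


@dataclass(frozen=True)
class ProxyEvent:
    """Hypothetical, simplified proxy log record."""
    user: str
    host: str
    bytes_out: int


def evaluate_events(events):
    """Return (action, user, host) tuples for one day's worth of proxy events."""
    uploaded = defaultdict(int)
    actions = []
    for event in events:
        host = event.host.lower()
        if host not in PUBLIC_LLM_DOMAINS:
            continue  # not AI traffic; out of scope for these rules
        if host not in APPROVED_LLM_DOMAINS:
            actions.append(("BLOCK", event.user, host))
            continue
        uploaded[(event.user, host)] += event.bytes_out
    # Alert once per user and approved LLM when the daily upload total is large.
    for (user, host), total in sorted(uploaded.items()):
        if total >= UPLOAD_ALERT_BYTES:
            actions.append(("ALERT", user, host))
    return actions
```

In practice the same rules would live in the proxy, DLP, or SIEM product; the sketch only shows how the unapproved-LLM block and the large-upload alert relate to each other.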

Understanding Organizational AI Initiatives

- Build Informed Policies: The business-engaged CISO should begin by understanding current and anticipated uses of AI. The goal is to outline and approve policies covering data privacy, ethical use and compliance with regulations. The CISO will not solely be responsible for these policies but must be a leader and stakeholder.

- Collaborate and Integrate Security into the AI pipeline: Following a model similar to their engagement with traditional Development Teams, the CISO should work closely with AI stakeholders for early consideration of AI security policies.

Consuming Publicly Available AI

- Train and Spread Awareness: The CISO should review and update the Security Awareness program with simple guidance and resources for all employees to understand policy and risks with the use of AI and large language models. This includes training employees on how to use AI technologies responsibly and securely. CISOs should add “deep fake” content to existing phishing awareness training.

- Identify Uses: Gather uses through inquiry then verify and hunt using existing tools such as CASB and EDR to gather data on public AI endpoint traffic volume and the sources generating the traffic.
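The "verify and hunt" step above can be sketched as a summary over an exported traffic log. This assumes the CASB or EDR tool can export CSV with `source`, `destination`, and `bytes` columns; those column names, the endpoint list, and `summarize_ai_traffic` are hypothetical choices for this example.

```python
import csv
import io
from collections import Counter

# Assumption: an illustrative list of public AI endpoints to look for.
AI_ENDPOINTS = {"chatgpt.com", "claude.ai", "gemini.google.com"}


def summarize_ai_traffic(csv_text):
    """Return (source, destination, total_bytes) rows, largest volume first."""
    totals = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        destination = row["destination"].strip().lower()
        if destination in AI_ENDPOINTS:
            totals[(row["source"].strip(), destination)] += int(row["bytes"])
    return [(src, dest, total) for (src, dest), total in totals.most_common()]
```

The ranked list shows which sources generate the most public AI traffic, which can then be compared with the uses gathered through inquiry.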

Protecting Internally Developed AI

- Protect Models: Internally developed AI models can quickly become “crown jewels” of the organization, and the same policies and protections that are placed on existing high-value data should also be placed on AI models.

- Use “Traditional” Controls: Use existing data loss prevention and identity and access management controls to prevent, and alert on, unauthorized or anomalous access to the training data or models.

Enhancing Incident Response

- Role of Purple Teams: Conduct purple team exercises that include AI-generated payloads and scripts to understand the effectiveness and limits of controls.

- New TTX Injects: Include AI in the next tabletop exercise scenario and begin to develop an incident response plan for AI-related security incidents (deep fakes, data loss, AI model poisoning, etc.).

Enhancing Detection and Response

- Increase SOC Efficiency: Boost SOC agility by integrating AI for rapid SIEM and EDR content prototyping for emerging threats. Use modern SOAR to show analysts full contextual information to enable faster triage and mitigation.

- Reduce Attack Surface: Promote fewer vulnerabilities to production using AI SAST tooling to identify security flaws in source code.

Potential Risks with AI

There are some clear risks that the CISO should also convey to the organization as using public AI becomes more ingrained into the culture:

IP and Content Risks

- Potential intellectual property (IP) or contractual issues may arise when data is used to train or develop AI models without the necessary approvals.

- AI may generate misleading or harmful content when low-quality data is used to train generative AI models.

Data Loss and Tampering Risks

- AI models create the risk of losing client or internal data when it is entered into the models. We don’t yet know how the public models treat input data and how, or whether, queries are stored with security in mind.

- AI models are vulnerable to “poisoning” if the data they use as input is tampered with.

Usage Risks

- Many AI models lack transparency in how they make decisions.

- They can create misinformation and other harmful content. Humans may then pass off AI-generated “hallucinations” or incorrect responses as fact.

AI-Focused Alerts and Blocks for Consideration

| RULE TITLE | DESCRIPTION | LOG/ALERT/BLOCK | PURPLE TEAM TEST CASE |
| --- | --- | --- | --- |
| High Volume of Data Uploaded to Public LLM | An employee or contractor uploads a large volume of data to a public LLM such as ChatGPT. | ALERT – High (DLP) | Attempt to upload a large data set to an approved Public LLM. |
| Attempt to Access Blocked Public LLM | An employee or contractor attempts to gain access to a public LLM such as ChatGPT that has been blocked. | LOG – Informational (SIEM) | Attempt to access a blocked Public LLM using a corporate asset. |
| Block Unauthorized Public LLM | Block unauthorized Public LLMs at the firewall and/or proxy. | BLOCK (Firewall / Proxy) | Attempt to access a blocked Public LLM using a corporate asset. |
| LLM Training Data has Sensitive Information | Code Repository Scanning tool identifies sensitive data sets within code repositories. The sensitive data sets should be stored outside of the code repository. | ALERT – High (Code Repository Scanner) | Insert benign sensitive data into a code repository. |
| LLM Training Data Potentially Tampered | Code Repository Scanning tool identifies a change to the training data used for internal LLMs outside of the change control window. | ALERT – Medium (Code Repository Scanner) | Make a benign change to the training data set outside of approved change control windows. |
| Copilot Returned Sensitive Information in Query Response | A Copilot response to an employee or contractor query returns sensitive data such as PII or passwords. | ALERT – Medium (SIEM) | Design and execute a query that returns sensitive benign data from a fake data set. |
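As one way to picture the "LLM Training Data Potentially Tampered" rule, the sketch below compares file hashes against a stored baseline and raises an alert only when a change is observed outside the change control window. The Saturday 01:00 to 05:00 window, the manifest format (file name mapped to SHA-256 digest), and the function names are assumptions for illustration; a commercial scanner would implement this differently.

```python
import hashlib
from datetime import datetime, time

# Assumption: a hypothetical weekly change control window (Saturday 01:00-05:00).
CHANGE_WINDOW_WEEKDAY = 5  # Monday is 0, so 5 is Saturday
CHANGE_WINDOW_START = time(1, 0)
CHANGE_WINDOW_END = time(5, 0)


def sha256_of(data: bytes) -> str:
    """Digest used to build the baseline and current manifests."""
    return hashlib.sha256(data).hexdigest()


def in_change_window(observed_at: datetime) -> bool:
    return (
        observed_at.weekday() == CHANGE_WINDOW_WEEKDAY
        and CHANGE_WINDOW_START <= observed_at.time() < CHANGE_WINDOW_END
    )


def detect_tampering(baseline, current, observed_at):
    """Return ("ALERT-Medium", file_name) for each file that was added,
    removed, or modified outside the change control window."""
    changed = sorted(
        name
        for name in baseline.keys() | current.keys()
        if baseline.get(name) != current.get(name)
    )
    if not changed or in_change_window(observed_at):
        return []
    return [("ALERT-Medium", name) for name in changed]
```

The purple team test case in the table maps directly onto this check: make a benign edit to one training file outside the window and confirm that an alert for that file is produced.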

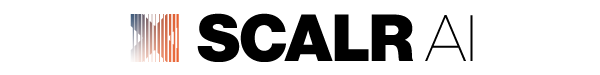

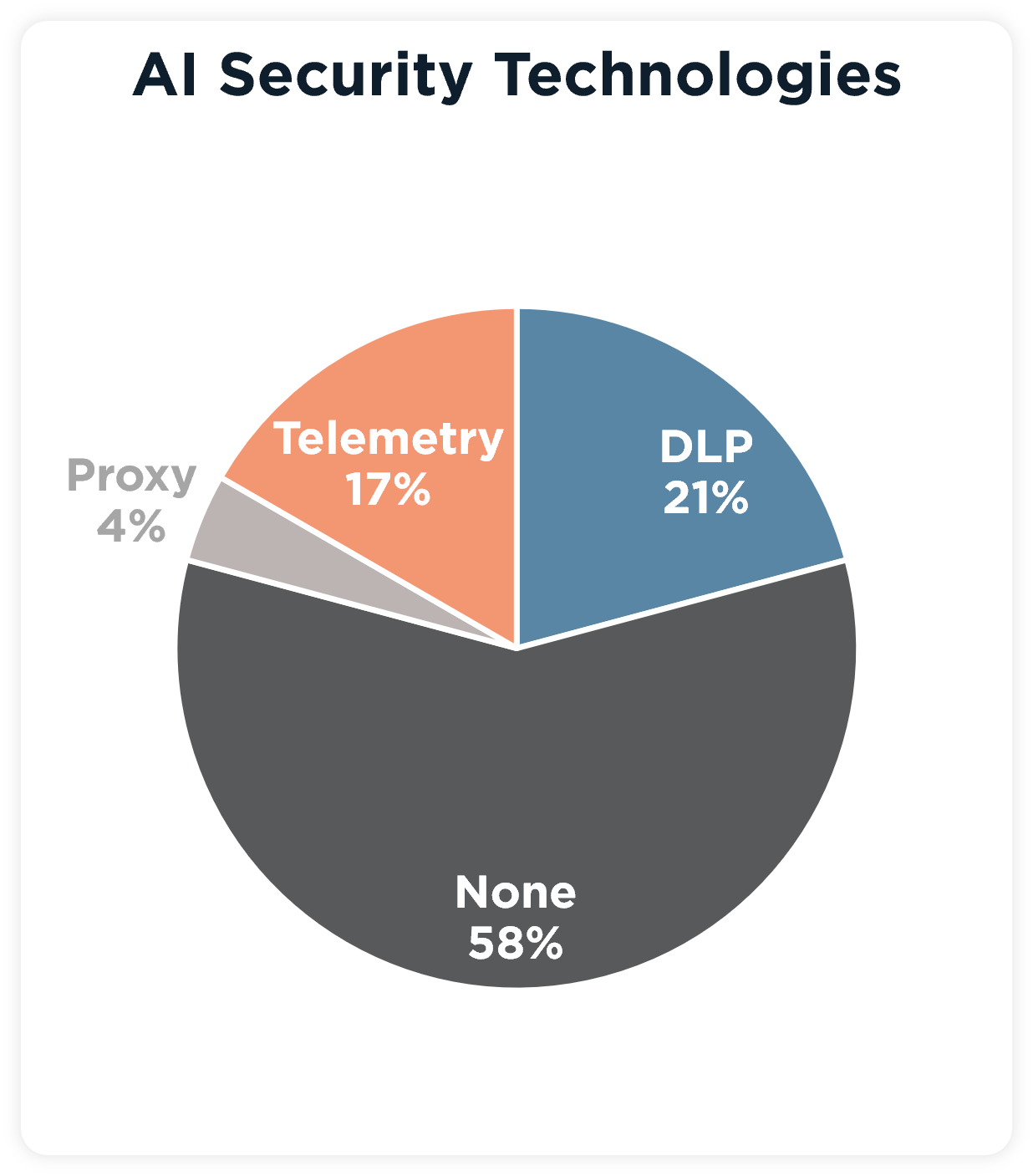

AI Poll Results

Chris Salerno

Chris leads SRA’s 24x7 CyberSOC services. His background is in cybersecurity strategy based on NIST CSF, red and purple teams, improving network defenses, technical penetration testing and web applications.

Prior to shifting his focus to defense and secops, he led hundreds of penetration tests and security assessments and brings that deep expertise to the blue team.

Chris has been a distinguished speaker at BlackHat Arsenal, RSA, B-Sides and SecureWorld.

Prior to Security Risk Advisors, Chris was the lead penetration tester for a Big4 security practice.