TL;DR

This post provides an example of how administrative access to a ManageEngine OpManager application allows testers to obtain command execution on the underlying OS using the Workflow function.

Intro

During external penetration tests, we often come across applications and appliances that are configured with default credentials. Once access is obtained, we attempt to determine if access to additional hosts or the underlying operating system (“OS”) is possible through the application to further our access into an environment.

The Setup

During one of our recent external exercises, we discovered an exposed instance of Zoho’s ManageEngine OpManager, a network performance manager designed to monitor networking devices, servers, and other infrastructure. While we discovered it Internet-facing, the technique described here can be just as effective on an internal network assessment.

Example login prompt for ManageEngine OpManager. Some instances are kind enough to prepopulate the username/password for you…

After a trivial login “bypass” we found ourselves with access to the console. The monitored devices, most of which were network switches, were listed only with internal IP addresses and host OS information. We identified a series of SNMP community strings and accounts that were registered in the application for executing workflow tasks, but there was not enough information to understand what level of access the accounts had to each host. We then discovered the “Workflows” functionality, which allows users to create a series of jobs that get executed on an endpoint. Although we did not have a host to run them on, we were able to query the Postgres database to see if there was anything we could leverage for internal access. Unfortunately, all we found was a listing of all of the domain’s registered users. (Note: depending on the version of OpManager, there are exploits that can be used to obtain RCE via the Postgres database; however, our instance was patched.)

The Delivery

Stepping back, we knew that the web server hosting OpManager probably had Internet access, as it seemed to be in a DMZ. However, we did not know the server's internal IP address, so we did not have a proper target to run workflows against. This led to a simple exchange between the team:

“We know the host OS. We know the web application has access. What can we do if we don’t have the internal IP address?”

“<in joking tone> How about we just go home?”

After navigating to “Inventory > Add a Device” you can register the localhost as a device. You are not required to select or add any credentials for this registration.

The Punchline

A quick search of all the assets showed that there was one address that was not registered in OpManager: 127.0.0.1. Using our access, we were able to register localhost as an asset for monitoring, and from testing we discovered that we did not have to specify an account to manage any of the tasks (unlike when you add a remote host to the inventory). With the registration in place, we could craft our workflows, but we were not sure what user context the commands would execute under.

Successful registration of 127.0.0.1!
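The inventory check described above can be sketched in a few lines of Python. This is only an illustration, assuming the registered device addresses have already been copied out of the console into a list; the function name `find_loopback_entries` and the sample inventory are hypothetical and are not part of OpManager.

```python
import ipaddress


def find_loopback_entries(addresses):
    """Return the entries that parse as loopback IP addresses (127.0.0.0/8 or ::1)."""
    hits = []
    for raw in addresses:
        candidate = raw.strip()
        try:
            ip = ipaddress.ip_address(candidate)
        except ValueError:
            # Not an IP literal (for example a hostname); skip it.
            continue
        if ip.is_loopback:
            hits.append(candidate)
    return hits


if __name__ == "__main__":
    # Hypothetical inventory; these values are made up for illustration.
    inventory = ["10.0.4.12", "10.0.4.13", "switch-core-01", "192.168.20.5"]
    found = find_loopback_entries(inventory)
    if found:
        print("Loopback already registered:", found)
    else:
        print("No loopback address registered in the inventory")
```

An empty result corresponds to the situation in this engagement, where 127.0.0.1 was available to register. The same check is useful for defenders reviewing whether the monitoring server has been added as its own device.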

To answer that question, we created a workflow that would enumerate the user, domain, and hostname and then send the information as part of a POST request to one of our test servers. Because we did not specify an account to use when we registered the asset, the workflow ran under the user context of the application, which ended up being SYSTEM.

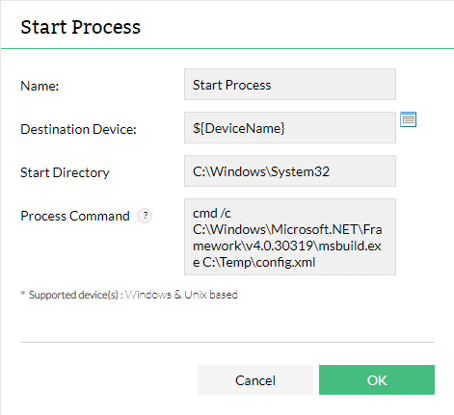

With localhost registered, navigate to “Workflow > Create New Workflow”, then drag “Start Process” to the workflow pane. In this instance we opted to output the user, domain, and machine name of our lab machine.

Resulting output from our netcat listener in the lab.
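The post does not show the exact command used in the enumeration workflow, so the following is only a rough Python sketch of the idea: collect the user, domain, and machine name and encode them as a form body suitable for a POST request. It assumes the standard Windows environment variables `USERNAME`, `USERDOMAIN`, and `COMPUTERNAME`; the function name and field names are hypothetical, and the sketch deliberately stops short of sending anything.

```python
import os
import socket
from urllib.parse import urlencode


def build_identity_body(env=None):
    """Build a form-encoded body with the user, domain, and host name.

    `env` defaults to the process environment; passing a dict makes the
    function easy to exercise in a lab without touching the real host.
    """
    env = os.environ if env is None else env
    fields = {
        "user": env.get("USERNAME", ""),
        "domain": env.get("USERDOMAIN", ""),
        # Fall back to the socket hostname if COMPUTERNAME is not set.
        "host": env.get("COMPUTERNAME") or socket.gethostname(),
    }
    return urlencode(fields)


if __name__ == "__main__":
    # Lab-style sample values, made up for illustration.
    sample = {"USERNAME": "labuser", "USERDOMAIN": "LAB", "COMPUTERNAME": "OPM01"}
    print(build_identity_body(sample))
```

In a lab, a body like this could be posted to a listener you control (such as the netcat listener mentioned above) to confirm which account the workflow process runs as.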

We then registered and executed two more workflows: one to download a stageless Cobalt Strike beacon and another to run MSBuild to execute it. With that, we had our internal access and could move on to further compromise of the domain, leveraging all of the account information we had previously collected from the Postgres database.

Subsequent workflow tasks. The first uses PowerShell to download our crafted XML from Cobalt Strike and save it to C:\Temp. The second uses msbuild.exe to execute the file.

Successfully established a beacon from OpManager’s workflow execution in Cobalt Strike as replicated in our lab.

The lesson from this external test: sometimes the answer you need is the simplest one, and sometimes all you need to do is just go home.

David focuses on Red Team assessments, network penetration testing, and web application testing. He also has experience in forensic analysis and risk and compliance reviews.

David works with companies in many different industries, including financial services, technology, healthcare, entertainment, and energy.

Prior to joining Security Risk Advisors, David was the team lead for the Federal Reserve Bank of Philadelphia’s Information Security Assurance team. His responsibilities included security engineering, risk-based technical assessments, incident response, and forensic analysis.